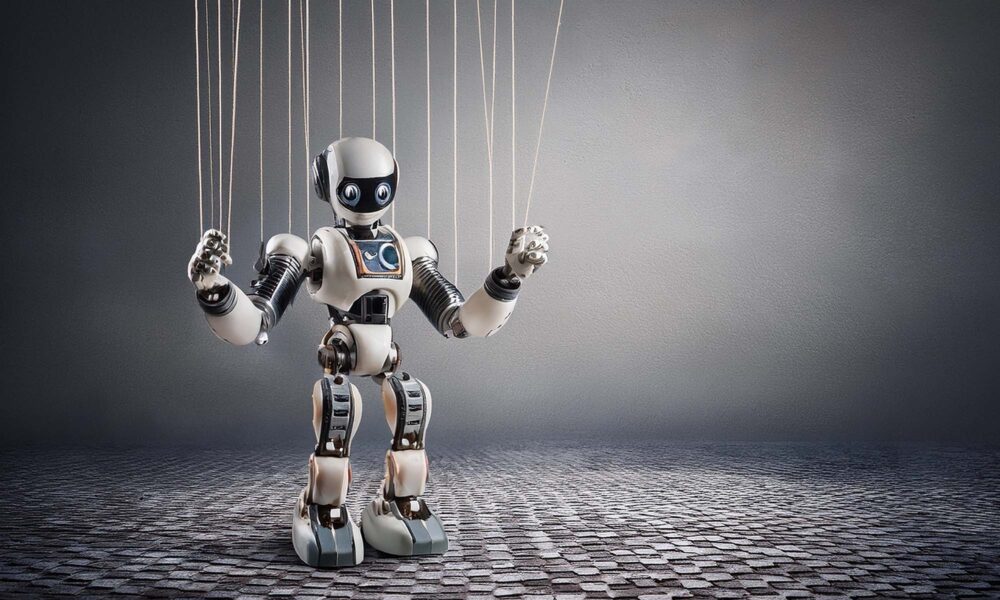

Explore the complexities of prompt injection in large language models. Discover whether complete safety from this vulnerability is achievable in...

Discover how overreliance on connectivity affects your home privacy. Gain insights into protecting your sensitive and personal information in the digital age.

Despite claiming otherwise, Big Tech still shares your data with third parties, and the only thing that can stop it is stricter regulation.

According to an IBM report, the cost of cybersecurity incidents in the Middle East reached a new high of $6.93 million per data breach in 2021.

The cybercriminals behind the attacks have a variety of different motives, from extorting money from gaming companies to causing reputation damage to preventing competing players from...

When it comes to subscription-based VPN services, there are a few things to look out for when selecting a quality provider. This guide helps you...

Unless you’re dealing with an extremely sophisticated piece of malware, there are often obvious clues that your smartphone is under attack, or already compromised by hackers...