Security

The Largest Data Breaches In The Middle East

According to an IBM report, the cost of cybersecurity incidents in the Middle East reached a new high of $6.93 million per data breach in 2021.

The Middle East aspires to become the global digital hub, and countries like the United Arab Emirates, Saudi Arabia, and Qatar are already leading various global rankings of ICT Indicators, including mobile broadband speeds and social media use frequency. However, the growing digitalization of the MENA region has made it an attractive target for cybercriminal activity.

According to an IBM report, which studied 500 breached organizations from across the world, the cost of cybersecurity incidents in the Middle East reached a new high of $6.93 million per data breach in 2021, significantly exceeding the global average cost of $4.24 million per incident.

To help you see behind cybersecurity statistics and understand the reality of data breaches in the Middle East, we’ve put together this list of some of the largest data breaches that have occurred in the region. These breaches have affected various industries and have together resulted in the compromise of millions of sensitive personal and business records.

2021 – Moorfields Eye Hospital Dubai Attacked By A Ransomware Group

What Happened: The ransomware group AvosLocker attacked Moorfields Eye Hospital Dubai in 2021 and successfully downloaded over 60 GB of data that was stored on its servers, including copies of ID cards, accounting documents, call logs, and internal memos. The attackers then encrypted the original information and demanded a ransom, threatening the hospital to leak it if not paid.

How It Happened: After conducting a detailed investigation of the incident, Moorfields Eye Hospital Dubai determined that the ransomware that encrypted its data was either sent in an email or distributed via a malicious ad.

Implications: As unfortunate as it is, ransomware attacks on hospitals and other healthcare providers are fairly common. Luckily, this particular attack didn’t paralyze any critical systems whose unavailability would endanger patient’s lives. Still, attacks like this one are a significant concern for healthcare organizations, and keeping them at bay must be a top priority.

2020 – UAE Police Data Listed For Sale On A Web Database Marketplace

What Happened: When researching the darkest corners of the internet in 2020, security firm CloudSek discovered that a data set containing the personal information of 25,000 UAE police officers was up for sale on a darknet market for $500, with multiple samples made available for free to attract buyers.

How It Happened: To this day, it’s not known how the data breach happened. It’s possible that someone with legitimate access to the data was contacted by cybercriminals with an offer they failed to resist. Of course, a cybersecurity vulnerability or phishing are another potential causes.

Implications: Any sale of personal information of police officers and other public servants has serious implications for national security, and it can also undermine public trust in law enforcement agencies and their ability to protect personal data against cybercriminals.

2019 – Dubai-Based Exhibition Firm Hacked And Its Clients Targeted

What Happened: In 2019, the email server of Cheers Exhibition, a Dubai-based exhibition firm, was hacked. The attacker then used their privileged access to target Cheers Exhibition’s customers, scamming one of them out of $53,000.

How It Happened: We don’t know which exploit or vulnerability the attacker used to infiltrate the email server, but we know that the attacker created highly convincing spoofed emails with wire transfer instructions and fake invoices. The biggest sign of fraud was the use of the “[email protected]” email address instead of “[email protected].”

Implications: Phishing attacks like the one that targeted Cheers Exhibition clients are among the most widespread cyber threats in the world, and they continue to be surprisingly effective because people still don’t pay enough attention to signs of phishing. Additionally, phishing scams are becoming more and more sophisticated, increasingly often taking the form of highly targeted spear-phishing scams.

2018 – Personal Data Of Lebanese Citizens Living Abroad Leaked

What Happened: During the months leading up to Lebanon’s general elections in May 2018, the personal data of Lebanese citizens living abroad was leaked by Lebanese embassies. The leaked information included the full name of each voter, their dates of birth, addresses, religion, marital status, and more.

How It Happened: This unfortunate data breach happened because embassy officials sent an email message to Lebanese citizens living abroad with a spreadsheet containing the personal information of more than 5,000 people. As if that wasn’t bad enough, the email addresses of those who received the spreadsheet were entered in the Cc field instead of the Bcc field, making them clearly visible.

Implications: It’s estimated that approximately 19 percent of data breaches are caused by human error, and this data breach serves as a great example of how far-reaching consequences can the neglect of fundamental cybersecurity best practices have.

2018 – Ride-Hailing Service Careem Breached And 15 Million Users Exposed

What Happened: Careem is a Dubai-based ride-hailing service that currently operates in around 100 cities across 12 countries. In 2018, the service revealed that the account information of 14 million of its drivers and riders had been exposed.

How It Happened: White-hat hackers and bounty hunters had been finding serious security weaknesses in the Careem app since at least 2016. Apparently, the ride-hailing service kept ignoring them until its drivers and riders paid the price. It then kept quiet about the breach for three months before it finally issued a public announcement.

Implications: The exposure of the personal information of 14 million Careem users, including names, email addresses, phone numbers, and trip data, raises concerns about the security practices of the apps we rely on every day, and it also highlights the importance of prompt and transparent communication in the event of a data breach.

2016 – Database With The Personal Data Of 50 Million Turkish Citizens Posted Online

What Happened: An anonymous hacker posted a government database containing the personal data of 50 million Turkish citizens on a torrent site, allowing anyone to download the roughly 1.4 GB compressed file. Included with the database was a message taunting the Turkish government and its approach to cybersecurity.

How It Happened: The anonymous hacker who uploaded the database revealed that poor data protections — namely a hardcoded password — were the main reason why they were able to obtain it in the first place. Hardcoded passwords are sometimes used as a means of authentication by applications and databases, but their use is generally considered to be a bad practice because they can lead to data breaches.

Implications: Governments store more information about their citizens than ever before, so it’s their responsibility to adequately protect it. Any failure to do so could potentially have far-reaching consequences for those in power as well as those who elected them.

2016 – Qatar National Bank (QNB) Breach Exposed Troves Of Customer Data

What Happened: In April 2016, the whistleblower site Cryptome became home to a large collection of documents from Qatar National Bank. The leak comprised more than 15,000 files, including internal corporate documents and sensitive financial data of the bank’s thousands of customers, such as passwords, PINs, and payment card data.

How It Happened: The cause of the Qatar National Bank breach remains unknown. It’s certain, however, that the attacker must have had obtained privileged access to the bank’s internal network otherwise they wouldn’t be able to steal nearly 1 million payment card numbers together with expiration dates, credit limits, cardholder details, and other account information.

Implications: The breach highlighted the need for stronger cybersecurity measures in the financial sector and underscored the importance of maintaining robust security practices to prevent unauthorized access to sensitive financial data. Fortunately, the bank enforced multi-factor authentication, preventing attackers from using the stolen customer data to make unauthorized transactions.

2012 – Saudi Arabian Oil Company (Aramco) Compromised By Iran

What Happened: In retaliation against the Al-Saud regime, Iran-backed hacking group called the “Cutting Sword of Justice” wiped data from approximately 35,000 computers belonging to Aramco, a Saudi Arabian public petroleum and natural gas company based in Dhahran.

How It Happened: The hacking group used malware called Shamoon, which is designed to spread to as many computers on the same network as possible and, ultimately, make them unusable by overwriting the master boot record.

Implications: The attack on Aramco in 2012 demonstrated the potential of nation-states and state-sponsored groups to use cyber warfare to target critical infrastructure and disrupt a nation’s economy. Since then, multiple other attacks on critical infrastructure have occurred, perhaps the most notable of which is the Colonial Pipeline ransomware attack of 2021.

Security

Can LLMs Ever Be Completely Safe From Prompt Injection?

Explore the complexities of prompt injection in large language models. Discover whether complete safety from this vulnerability is achievable in AI systems.

The recent introduction of advanced large language models (LLMs) such as OpenAI’s ChatGPT and Google’s Gemini has made it possible to have natural, flowing, and dynamic conversations with AI tools, as opposed to the predetermined responses we received in the past.

These natural interactions are powered by the natural language processing (NLP) capabilities of these tools. Without NLP, LLM models would not be able to respond as dynamically and naturally as they do now.

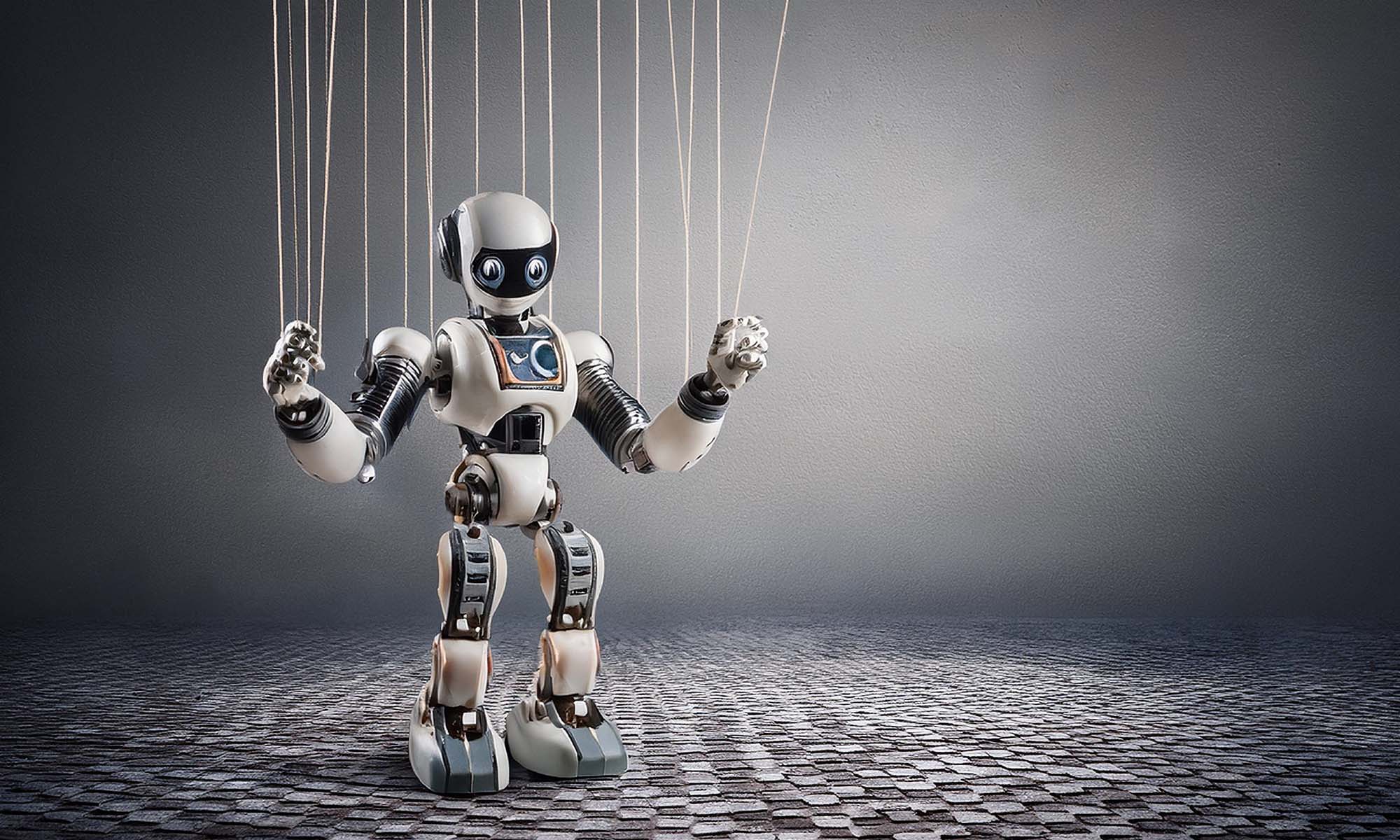

As essential as NLP is to the functioning of an LLM, it has its weaknesses. NLP capabilities can themselves be weaponized to make an LLM susceptible to manipulation if the threat actor knows what prompts to use.

Exploiting The Core Attributes Of An LLM

LLMs can be tricked into bypassing their content filters using either simple or meticulously crafted prompts, depending on the complexity of the model, to say something inappropriate or offensive, or in particularly extreme cases, even reveal potentially sensitive data that was used to train them. This is known as prompt injection. LLMs are, at their core, designed to be helpful and respond to prompts as effectively as possible. Malicious actors carrying out prompt injection attacks seek to exploit the design of these models by disguising malicious requests as benign inputs.

You may have even come across real-world examples of prompt injection on, for example, social media. Think back to the infamous Remotelli.io bot on X (formerly known as Twitter), where users managed to trick the bot into saying outlandish things on social media using embarrassingly simple prompts. This was back in 2022, shortly after ChatGPT’s public release. Thankfully, this kind of simple, generic, and obviously malicious prompt injection no longer works with newer versions of ChatGPT.

But what about prompts that cleverly disguise their malicious intent? The DAN or Do Anything Now prompt was a popular jailbreak that used an incredibly convoluted and devious prompt. It tricked ChatGPT into assuming an alternate persona capable of providing controversial and even offensive responses, ignoring the safeguards put in place by OpenAI specifically to avoid such scenarios. OpenAI was quick to respond, and the DAN jailbreak no longer works. But this didn’t stop netizens from trying variations of this prompt. Several newer versions of the prompt have been created, with DAN 15 being the latest version we found on Reddit. However, this version has also since been addressed by OpenAI.

Despite OpenAI updating GPT-4’s response generation to make it more resistant to jailbreaks such as DAN, it’s still not 100% bulletproof. For example, this prompt that we found on Reddit can trick ChatGPT into providing instructions on how to create TNT. Yes, there’s an entire Reddit community dedicated to jailbreaking ChatGPT.

There’s no denying OpenAI has accomplished an admirable job combating prompt injection. The GPT model has gone from falling for simple prompts, like in the case of the Remotelli.io bot, to now flat-out refusing requests that force it to go against its safeguards, for the most part.

Strengthening Your LLM

While great strides have been made to combat prompt injection in the last two years, there is currently no universal solution to this risk. Some malicious inputs are incredibly well-designed and specific, like the prompt from Reddit we’ve linked above. To combat these inputs, AI providers should focus on adversarial training and fine-tuning for their LLMs.

Fine-tuning involves training an ML model for a specific task, which in this case, is to build resistance to increasingly complicated and ultra-specific prompts. Developers of these models can use well-known existing malicious prompts to train them to ignore or refuse such requests.

This approach should also be used in tandem with adversarial testing. This is when the developers of the model test it rigorously with increasingly complicated malicious inputs so it can learn to completely refuse any prompt that asks the model to go against its safeguards, regardless of the scenario.

Can LLMs Ever Truly Be Safe From Prompt Injection?

The unfortunate truth is that there is no foolproof way to guarantee that LLMs are completely resistant to prompt injection. This kind of exploit is designed to exploit the NLP capabilities that are central to the functioning of these models. And when it comes to combating these vulnerabilities, it is important for developers to also strike a balance between the quality of responses and the anti-prompt injection measures because too many restrictions can hinder the model’s response capabilities.

Securing an LLM against prompt injection is a continuous process. Developers need to be vigilant so they can act as soon as a new malicious prompt has been created. Remember, there are entire communities dedicated to combating deceptive prompts. Even though there’s no way to train an LLM to be completely resistant to prompt injection, at least, not yet, vigilance and continuous action can strengthen these models, enabling you to unlock their full potential.